How can you make your computationally intensive application run like lightning on the cloud? Use Cloud Parallel Processing!

Some computational tasks are so demanding that a single machine cannot process them in a reasonable amount of time. Applications that draw on massive amounts of data from the Web, a social media network or any other large-scale system can easily overwhelm a single machine, and many other analytical tasks operate on similarly large data sets.

Parallel processing has been automated and widely practiced at the processor level. To use the cloud efficiently, however, an application must scale out: multiple nodes share the workload and therefore get things done faster. Parallel processing at the application level is necessary to harness the power of the cloud.

Creating a Scalable and Elastic Application

With the advent of cloud computing, a large-scale and virtually unbounded grid of compute nodes has become a reality. In principle, this makes the cloud an ideal substrate for on-demand, high-performance parallel processing: the cloud can adapt elastically when CPU requirements change, spinning up or tearing down nodes accordingly.
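To make "adapt elastically" concrete, here is a minimal, hypothetical sketch of a scaling rule. It calls no real cloud provider API; `desired_node_count`, `scaling_action` and the `tasks_per_node` threshold are names invented for illustration. The pool sizes itself from its backlog of pending tasks, clamped between a minimum and a maximum number of nodes:

```python
import math


def desired_node_count(pending_tasks: int,
                       tasks_per_node: int,
                       min_nodes: int = 1,
                       max_nodes: int = 100) -> int:
    """Hypothetical policy: enough nodes that each has at most
    `tasks_per_node` pending tasks, clamped to [min_nodes, max_nodes]."""
    if tasks_per_node <= 0:
        raise ValueError("tasks_per_node must be positive")
    needed = math.ceil(pending_tasks / tasks_per_node)
    return max(min_nodes, min(max_nodes, needed))


def scaling_action(current_nodes: int, desired_nodes: int) -> str:
    """Describe the elastic response; a real system would call a provider API here."""
    if desired_nodes > current_nodes:
        return f"spin up {desired_nodes - current_nodes} node(s)"
    if desired_nodes < current_nodes:
        return f"tear down {current_nodes - desired_nodes} node(s)"
    return "no change"


if __name__ == "__main__":
    # Simulate a backlog that grows and then drains; the pool follows it.
    current = 2
    for backlog in [10, 250, 40, 0]:
        target = desired_node_count(backlog, tasks_per_node=25, max_nodes=8)
        print(f"backlog={backlog:4d} -> {scaling_action(current, target)}")
        current = target
```

Production autoscalers usually add a cooldown period on top of a rule like this, so that short spikes do not cause nodes to be spun up and torn down repeatedly.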

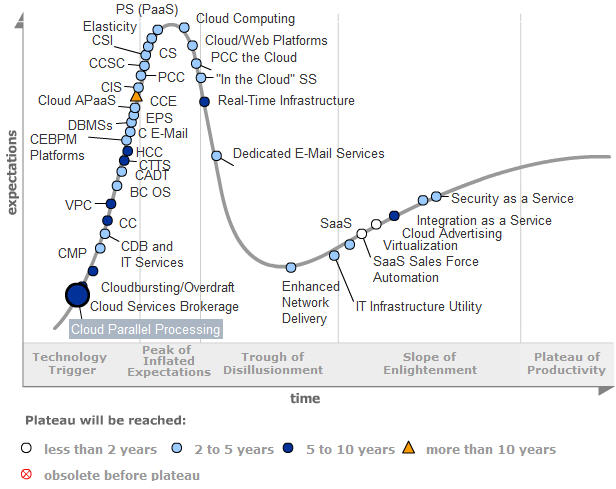

In the latest Gartner Hype Cycle for Cloud Computing (July 2010), Cloud Parallel Processing appears as a new entrant on the technology adoption curve. Gartner notes that “as cloud-computing concepts become a reality, the need for some systems to operate in a highly dynamic grid environment will require these techniques to be incorporated into some mainstream programs. […] The application developer can no longer ignore this as a design point for applications.”

Parallel Programming is hard!

This points to the main caveat of parallel processing: it is very hard to build scalability and elasticity into general-purpose applications. But if you cannot make multiple nodes share the workload, the cloud will not help you get things done faster.

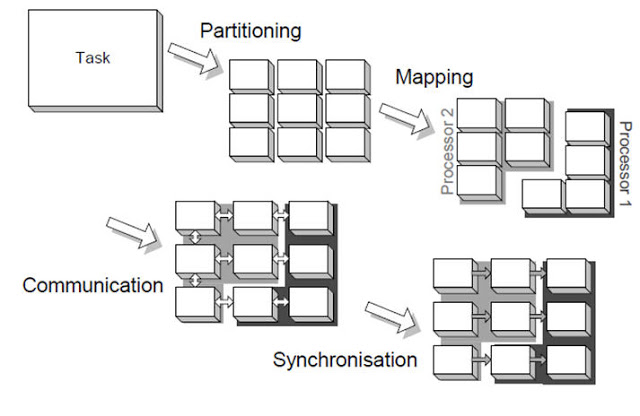

How can you make your computationally intensive application run in parallel on several nodes? A parallel programmer must address these steps:

- Partition the workload into smaller items

- Map these onto processors or machines

- Communicate between these subtasks

- Synchronize between subtasks as required
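The four steps can be seen together in a small sketch that computes a sum of squares. This is an illustration under simplifying assumptions: threads stand in for nodes (so there is no real speedup in CPython), and the names `partition`, `worker` and `parallel_sum_of_squares` are made up here. Workers never write to shared state; they only send their partial results as messages through a queue:

```python
import queue
import threading


def partition(data, n_parts):
    """Step 1: split the workload into n_parts chunks of nearly equal size."""
    size, rem = divmod(len(data), n_parts)
    chunks, start = [], 0
    for i in range(n_parts):
        end = start + size + (1 if i < rem else 0)
        chunks.append(data[start:end])
        start = end
    return chunks


def worker(worker_id, chunk, outbox):
    """Compute a partial result locally, then communicate it as a message (step 3)."""
    partial = sum(x * x for x in chunk)
    outbox.put((worker_id, partial))


def parallel_sum_of_squares(data, n_workers=4):
    chunks = partition(data, n_workers)
    outbox = queue.Queue()
    # Step 2: map each chunk onto its own worker (threads stand in for nodes).
    workers = [threading.Thread(target=worker, args=(i, chunk, outbox))
               for i, chunk in enumerate(chunks)]
    for w in workers:
        w.start()
    # Step 4: synchronize; wait for one message from every worker before combining.
    partials = [outbox.get() for _ in workers]
    for w in workers:
        w.join()
    return sum(p for _, p in partials)


if __name__ == "__main__":
    print(parallel_sum_of_squares(list(range(1000))))
```

On a real cluster, each worker would run on a separate machine and the queue would be replaced by network messages, but the structure stays the same.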

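Note that synchronization is a real bugger, because it is anathema to parallelism: while a node waits for the others to reach a synchronization point, it does no useful work. Yet synchronization is unavoidable in most applications.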
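One common way to quantify this cost is Amdahl's law: if a fraction s of the runtime cannot run in parallel, the speedup on N nodes is at most S(N) = 1 / (s + (1 - s) / N), which never exceeds 1/s however many nodes the cloud provides. Counting time spent waiting at synchronization points as part of s is a simplifying assumption; the sketch only evaluates the formula:

```python
def amdahl_speedup(serial_fraction: float, nodes: int) -> float:
    """Upper bound on speedup when `serial_fraction` of the runtime is serial
    (here assumed to include time lost to synchronization)."""
    if not 0.0 <= serial_fraction <= 1.0:
        raise ValueError("serial_fraction must be in [0, 1]")
    if nodes < 1:
        raise ValueError("nodes must be >= 1")
    return 1.0 / (serial_fraction + (1.0 - serial_fraction) / nodes)


if __name__ == "__main__":
    for s in (0.0, 0.05, 0.25):
        row = ", ".join(f"{n} nodes: {amdahl_speedup(s, n):7.2f}x"
                        for n in (10, 100, 1000))
        print(f"serial fraction {s:.2f} -> {row}")
```

With a serial fraction of just 5%, even a thousand nodes cannot deliver more than a 20x speedup, which is why minimizing synchronization matters so much at cloud scale.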

Shift of Programming Model

Even with toolkits that offer communication and synchronization primitives (such as MPI), parallel programming is a bit like rocket science. Without a shift in the programming model, parallel programming will remain elusive. Programmers will have to deal with parallelism, at least to some degree: they can no longer rely on middleware, databases and operating systems to exploit parallelism for them.

Chris Haddad mentions the following programming model shifts that happen with the cloud:

- Actor model: dispatching and scheduling instead of direct invocation

- Queues and asynchronous interactions

- RESTful interactions

- Message passing instead of function calls or shared memory

- Eventual consistency instead of ACID
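The Actor model, queues and message passing can be illustrated with a minimal sketch. It is not any particular actor framework: `CounterActor` and its message format (`("add", n)`, `("get", reply_queue)`, `("stop", None)`) are a hypothetical protocol made up for this example. The actor owns private state and a mailbox; callers never invoke its logic directly but send asynchronous messages, and replies also arrive as messages:

```python
import queue
import threading


class CounterActor:
    """Minimal actor: private state, a mailbox, one thread handling messages in order."""

    def __init__(self):
        self._mailbox = queue.Queue()
        self._total = 0  # private state; only the actor's own thread touches it
        self._thread = threading.Thread(target=self._run, daemon=True)
        self._thread.start()

    def send(self, message):
        """Asynchronous send: enqueue the message and return immediately."""
        self._mailbox.put(message)

    def _run(self):
        while True:
            kind, payload = self._mailbox.get()
            if kind == "add":
                self._total += payload
            elif kind == "get":
                payload.put(self._total)  # reply with a message, not a return value
            elif kind == "stop":
                break

    def join(self):
        self._thread.join()


if __name__ == "__main__":
    actor = CounterActor()
    for n in range(1, 11):
        actor.send(("add", n))
    reply = queue.Queue()
    actor.send(("get", reply))
    print(reply.get())  # 55; blocks until the actor answers
    actor.send(("stop", None))
    actor.join()
```

Because the mailbox is processed one message at a time, the actor's state needs no locks; the only coordination is the queue itself.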

Fortunately, parallel programming has been a research topic for decades, long before the cloud existed. Demand for high-performance computation came from various domains, such as weather prediction, finite element simulation and many kinds of data analysis. During my PhD I worked on object-oriented parallel programming using clusters of COTS (commercial off-the-shelf) workstations. In parallel-computing jargon these are called distributed memory machines: each node has its own private memory, and nodes cooperate by exchanging messages over the network. The cloud is nothing other than a distributed memory machine, albeit at a much larger scale. My work also touched on the Actor model that Chris mentioned.

Watch this space

The principles and models that apply to distributed memory machines also apply to the cloud. In a follow-up series of posts I will look at these topics in more detail and examine how they can be applied to Cloud Parallel Processing. I’ll dig deeper into programming model shifts (such as Actors) and outline some parallel algorithms (such as MapReduce, n-body methods and others among the 13 computational dwarfs).
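As a preview of the map/reduce pattern, here is a minimal word-count sketch. It shows only the general map, shuffle and reduce phases, not Google's or Hadoop's implementation; threads stand in for cluster nodes, and the function names are made up for illustration:

```python
from collections import defaultdict
from concurrent.futures import ThreadPoolExecutor


def map_phase(document):
    """Map: emit a (word, 1) pair for every word in one document."""
    return [(word.lower(), 1) for word in document.split()]


def shuffle(mapped):
    """Shuffle: group all emitted values by key."""
    groups = defaultdict(list)
    for pairs in mapped:
        for key, value in pairs:
            groups[key].append(value)
    return groups


def reduce_phase(key, values):
    """Reduce: combine all values emitted for one key."""
    return key, sum(values)


def word_count(documents, workers=4):
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # Map tasks are independent, so they can run on any worker.
        mapped = list(pool.map(map_phase, documents))
        # list() above waits for every map task: the single synchronization point.
        groups = shuffle(mapped)
        reduced = pool.map(lambda kv: reduce_phase(*kv), groups.items())
        return dict(reduced)


if __name__ == "__main__":
    print(word_count(["the cloud", "The grid and the cloud"]))
```

The appeal of the pattern is that the programmer writes only sequential map and reduce functions, while partitioning, communication and synchronization are handled by the framework.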